In the world of ecommerce, every email you send has a job to do. Whether it’s pulling in a sale, reactivating a lapsed buyer, or boosting average order value, your emails need to earn their keep. That’s where A/B testing becomes a powerful optimization tool—not just for improving open or click rates, but for compounding email-driven revenue over time.

What Is A/B Testing in Email Marketing?

A/B testing (also known as split testing) refers to sending two or more variations of an email to randomly assigned groups from your list to determine which version performs better. You isolate a single element, such as a subject line, headline, call-to-action (CTA), or send time, and evaluate which change leads to improved performance on a specific metric.

This process isn’t guesswork. It’s about letting data make the decision for you. And for ecommerce brands, small wins in open rates or conversions can stack up into real improvements in ROI.

Why It Matters for Ecommerce Email Strategy

Your emails aren’t just content. They’re revenue drivers. Running A/B tests allows ecommerce brands to:

- Increase engagement by learning what catches a subscriber’s attention

- Boost conversions by refining CTAs, offer formats, or product placements

- Optimize campaign timing based on recipient behavior

- Personalize effectively using data-driven insights (like zero-party data) to shape messaging

Testing isn’t just about “better emails.” It’s about better business decisions.

How A/B Testing Works in Email Campaigns

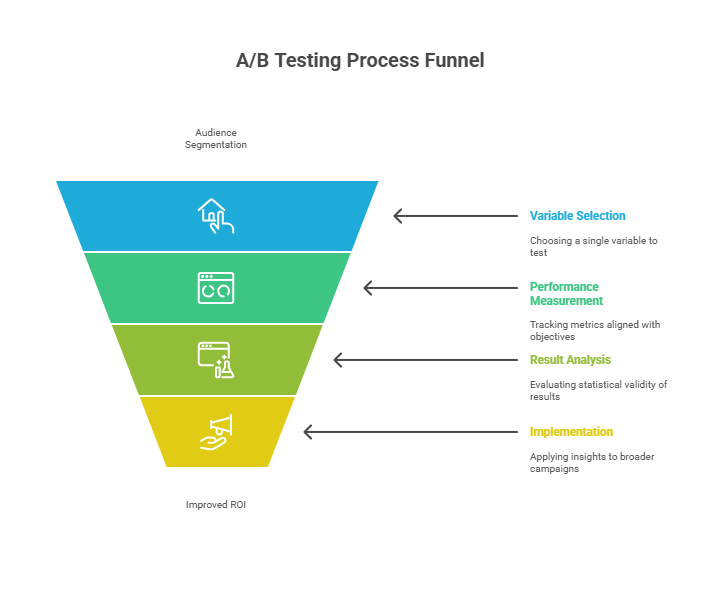

1. Segment and Split Your Audience

You divide a relevant portion of your email list into at least two random, non-overlapping groups of similar size. One group sees version A. The other sees version B.

Important: This group must be large enough to deliver statistically sound conclusions (more on that later in the series).
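
As a rough sketch, a random split can be as simple as shuffling subscriber IDs and cutting the list in half. The function name `split_audience`, the seed, and the example addresses below are illustrative assumptions; most email platforms handle this step for you.

```python
import random

def split_audience(subscriber_ids, seed=42):
    """Shuffle subscribers and split them into two non-overlapping groups."""
    ids = list(subscriber_ids)
    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    rng.shuffle(ids)
    midpoint = len(ids) // 2
    return ids[:midpoint], ids[midpoint:]

# Hypothetical list of 1,000 subscribers
subscribers = [f"user{i}@example.com" for i in range(1000)]
group_a, group_b = split_audience(subscribers)
print(len(group_a), len(group_b))  # 500 500
```

Shuffling before splitting is what keeps the groups comparable. Splitting by signup date or alphabetically can quietly bias one group.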

2. Change One Key Variable

To see the true impact of the tested variable, you test only one component at a time. That might be:

- Subject line (e.g. urgency vs. curiosity tone)

- CTA button text (e.g. “Shop Now” vs. “Reveal My Offer”)

- Layout structure or image hierarchy

Changing multiple components at once obscures what actually caused a difference in performance.

3. Measure Performance on Specific Metrics

Once the test is live, track performance using metrics aligned with your objective. For example:

- Open rate if you’re testing subject lines

- Click rate for CTA or layout tests

- Conversion rate when measuring revenue impact of offer framing

Once the test concludes and produces a statistically valid result, you roll out the better-performing version to the broader list—or use learnings to shape future campaigns.

This isn’t a one-time trick. Over time, regular A/B testing helps you stop guessing and start sending with confidence. It builds a testing culture that compounds insights into higher ROI across your email program.

Identifying Key Goals and Metrics

Your A/B tests are only as strong as the goals behind them. Before you send a single variation, you need clarity on what success looks like. That starts with aligning your test to a specific outcome that maps directly to revenue generation.

Set Campaign Objectives That Matter

Not every test will increase bottom-line revenue overnight, but every test should support a step in the buyer journey. Define goals that are measurable and meaningful within the scope of your ecommerce email program.

- Open rate: Focuses on subject line and sender name effectiveness

- Click-through rate (CTR): Assesses layout, copy, CTA, and content relevance

- Conversion rate: Evaluates product interest and offer response once someone lands onsite

- AOV or revenue-per-email: Measures the true financial impact of your changes

Each test should be tied to one of these conversion points—not just a vanity metric.

Track the Right Metrics to Improve ROI

Improving return on investment means connecting email performance with business outcomes. These are the foundational metrics to monitor during and after an A/B test:

- Open Rate: Percentage of recipients who opened the email. Useful for testing subject line variations.

- Click-Through Rate: Percentage of recipients who clicked any tracked link in the email. Key for testing CTAs, copy, buttons, and visuals.

- Conversion Rate: Percentage of users who completed a purchase or other goal action. Critical for testing offer language, urgency, and product displays.

- Revenue per Recipient (RPR): Total revenue generated from the email, divided by the number of recipients. Tells you which version delivers actual return.

- List Health Metrics: Includes unsubscribe rate, spam complaints, and bounce rates. Monitor these to ensure your test isn’t driving negative consequences.
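
To make these definitions concrete, here is a minimal sketch that turns raw counts for one variant into the rates above. The `VariantStats` fields and the example numbers are assumptions for illustration. It also assumes every rate is calculated per delivered recipient, which may differ from how your platform defines conversion rate.

```python
from dataclasses import dataclass

@dataclass
class VariantStats:
    delivered: int      # emails that reached an inbox
    opens: int          # unique opens
    clicks: int         # unique clicks on tracked links
    orders: int         # purchases attributed to the email
    revenue: float      # revenue attributed to the email
    unsubscribes: int

def summarize(stats: VariantStats) -> dict:
    """Compute per-recipient rates for a single email variant."""
    if stats.delivered <= 0:
        raise ValueError("delivered must be greater than zero")
    d = stats.delivered
    return {
        "open_rate": stats.opens / d,
        "click_through_rate": stats.clicks / d,
        "conversion_rate": stats.orders / d,
        "revenue_per_recipient": stats.revenue / d,
        "unsubscribe_rate": stats.unsubscribes / d,
    }

# Hypothetical results for one variant
variant_a = VariantStats(delivered=5000, opens=1500, clicks=250,
                         orders=50, revenue=3000.0, unsubscribes=10)
for name, value in summarize(variant_a).items():
    print(f"{name}: {value:.4f}")
```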

Match Test Metric to Business Goal

Each A/B test should answer one question at a time. Here’s how to align your selected metric with the right objective:

- Trying to increase traffic? Measure click-through rate.

- Want higher conversion? Track purchases or revenue-per-email.

- Need better inbox performance? Focus on open rates and engagement signals.

Clarity is the difference between testing for insight and testing for noise.

Baseline Before You Test

Before launching any experiment, establish your standard performance for each key metric. That way, you’ll know whether a test outcome is truly better or just normal fluctuation.

Your measurement plan should be in place before the test starts, not after the results roll in.
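
One simple way to put a number on “normal fluctuation” is to look at the spread of past campaigns. The sketch below is a rough heuristic, not a significance test: it flags a rate as unusual when it falls more than two standard deviations from the historical average. The function name, the two-deviation cutoff, and the sample open rates are all assumptions for illustration.

```python
from statistics import mean, stdev

def baseline_range(historical_rates, k=2.0):
    """Return (low, high) bounds: the mean plus or minus k standard deviations."""
    avg = mean(historical_rates)
    spread = stdev(historical_rates)
    return avg - k * spread, avg + k * spread

# Hypothetical open rates from six recent comparable campaigns
past_open_rates = [0.21, 0.23, 0.22, 0.24, 0.20, 0.22]
low, high = baseline_range(past_open_rates)

def outside_normal_range(rate):
    """True when a rate falls outside the historical band."""
    return rate < low or rate > high

print(f"normal range: {low:.3f} to {high:.3f}")
print(outside_normal_range(0.23))  # False: within the usual band
print(outside_normal_range(0.27))  # True: worth a closer look
```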

Targeting ROI improvement in email doesn’t just mean running more A/B tests. It means testing with purpose, tracking with precision, and making sure every metric you monitor ladders back to profitability.

Preparing Your Email Marketing Campaign for A/B Testing

A successful test starts long before you hit send. If you skip key steps in setup, your test results will be weak, inconclusive, or even misleading. Before launching your first A/B split, focus on these foundational steps to ensure each email test delivers real, usable insight.

Segment Your Email List First

A/B testing only works when you compare apples to apples. That means your test groups need to be made up of relevant, similar subscribers who share key characteristics, such as buying stage, past behavior, or engagement patterns.

- Use RFM segmentation to group subscribers by recency, frequency, and monetary value

- Filter by status (e.g., active buyers vs. window shoppers)

- Incorporate zero-party data to reflect purchase intent or collected preferences

Testing on your entire list rarely produces clean data. Segment first to target where results matter most.
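
For readers who want to see what RFM grouping can look like in practice, here is a minimal sketch. Every threshold and segment name in it is hypothetical and should be replaced with cutoffs that fit your own order data.

```python
from dataclasses import dataclass

@dataclass
class Customer:
    email: str
    days_since_last_order: int
    orders_last_year: int
    total_spend: float

def rfm_score(c: Customer) -> tuple:
    """Score recency, frequency, and monetary value from 1 (low) to 3 (high)."""
    # All thresholds below are illustrative assumptions.
    r = 3 if c.days_since_last_order <= 30 else 2 if c.days_since_last_order <= 90 else 1
    f = 3 if c.orders_last_year >= 5 else 2 if c.orders_last_year >= 2 else 1
    m = 3 if c.total_spend >= 500 else 2 if c.total_spend >= 100 else 1
    return r, f, m

def segment(c: Customer) -> str:
    """Map an RFM score to a coarse, hypothetical segment label."""
    r, f, _ = rfm_score(c)
    if r == 3 and f >= 2:
        return "active_buyer"
    if r == 1:
        return "lapsed"
    return "occasional"

customers = [
    Customer("a@example.com", 12, 6, 640.0),
    Customer("b@example.com", 200, 1, 45.0),
    Customer("c@example.com", 60, 1, 80.0),
]
for c in customers:
    print(c.email, rfm_score(c), segment(c))
```

Running the A/B split inside one of these segments, rather than across the whole list, keeps both test groups comparable.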

Establish Baseline Data

Before testing, you need a clear snapshot of how your current emails perform. This gives you a yardstick for evaluating changes.

Collect historical performance for each relevant metric, such as:

- Average open rate

- Click-through rate (CTR)

- Conversion rate or revenue-per-recipient (RPR)

Baseline data helps you determine whether a test result is a true improvement—or just noise.

Ensure Tracking Infrastructure Is Set Up

Your email platform must be properly configured to capture the right data. Before running any test, confirm that the following are in place:

- UTM tags or equivalent tracking parameters on all email links

- Event tracking for conversions (e.g., add to cart, purchase)

- Email platform analytics enabled and connected to ecommerce store

Without reliable tracking, your results won’t be actionable. Validate tracking before you test—not after.
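
If you tag links yourself rather than relying on your platform, a small helper keeps the parameters consistent across variants. This is a sketch using Python’s standard `urllib.parse` module. The parameter values (`newsletter`, `spring_sale`, `variant_b`) and the choice to carry the variant in `utm_content` are assumptions, not a required convention.

```python
from urllib.parse import urlparse, parse_qsl, urlencode, urlunparse

def add_utm(url, campaign, variant, source="newsletter", medium="email"):
    """Append UTM parameters to a link while keeping its existing query string."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
        "utm_content": variant,  # identifies which test version drove the click
    })
    return urlunparse(parts._replace(query=urlencode(query)))

# Hypothetical link from variant B of a spring sale email
tagged = add_utm("https://shop.example.com/sale?ref=hero", "spring_sale", "variant_b")
print(tagged)
```

Tagging each variant differently is what lets your analytics tool attribute post-click purchases to the right version.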

Test One Variable at a Time

To know what caused a change in performance, you must isolate a single variable. Testing multiple things at once, like a new subject line and a new layout, creates guesswork instead of clarity.

Examples of testable single variables include:

- Subject line wording

- CTA button color or text

- Image placement or presence

Focus on one change per test so you can draw reliable conclusions and avoid false positives.

Checklist Before Launching Your A/B Test

- Segments are clearly defined and representative of your intended target group

- Baseline performance metrics are documented and accessible

- Tracking mechanisms are tested and confirmed accurate

- Only one test variable is in play for this experiment

Start clean, track accurately, and isolate impact. Those are the prerequisites for data you can trust.

Essential Elements to Test in Email Campaigns

Not all changes lead to profit. But some will. To find them, you need to test the right elements inside your emails. Every email has multiple components that influence performance, from subject line to CTA button. When tested systematically, each offers a low-risk opportunity to improve engagement and drive conversions that matter.

1. Subject Lines

The subject line is your invite. It determines whether your email is ever seen. Small changes here can lead to significant swings in open rates.

- Tone: Friendly vs. urgent vs. curious

- Length: Short and punchy vs. more descriptive

- Personalization: Using first name, preferences, or interests

- Emojis: Included or left out

Test subject lines when your goal is to lift open rates and inbox engagement.

2. Send Times

Timing isn’t just logistics. It’s strategy. The hour and day you send can influence how likely someone is to open or act.

- Morning vs. evening

- Weekday vs. weekend

- Immediate send vs. behavior-based timing

Pro Tip: Use RFM data and engagement history to shape send time hypotheses before testing.

3. Layout and Structure

How your content is presented matters as much as what it says. Clean designs typically outperform noisy ones, but different audiences respond to different layouts.

- Single-column vs. multi-column formats

- Hero images placed at top vs. mid-email

- Navigation links included or removed

Test layouts when aiming to improve click-through rates or guide attention toward a specific product or offer.

4. Call-to-Action (CTA)

Your CTA is the moment of decision. It needs to be seen, understood, and compelling.

- Text: “Buy Now” vs. “See What’s Inside”

- Color and size: High contrast vs. blended buttons

- Placement: Above the fold vs. after product details

CTA tests are some of the clearest to measure. They often directly impact click-through and conversion rates.

5. Visuals and Images

Good imagery does more than decorate. It drives emotion and behavior. That includes product photos, lifestyle shots, and even iconography.

- Static vs. animated images

- Number of images included

- Image captions or overlays used

If visual content differs across variants, keep all other variables stable so results remain credible.

6. Personalization and Dynamic Content

Zero-party data lets you tailor emails at scale. The key is knowing how and where to use it effectively.

- Name and location callouts: “Hey, Sarah in Austin” vs. generic greeting

- Product recommendations: Based on past views or purchases

- Preference-based offers: Tailoring discounts to declared interests

Test personalization tactics when you want to improve conversion rates or deepen engagement with existing buyers.

Plan Tests Around What You Can Act On

Every one of these elements can be tested. But that doesn’t mean they all should be at once. Choose based on:

- Your campaign goal (e.g., open vs. conversion)

- What you can learn and repeat across other campaigns

- Ease of implementation for broader rollout

Test what you can consistently improve, not what’s difficult to scale.

Testing Template: What to Try and Measure

| Email Element | Variation to Test | Metric to Track |
|---|---|---|
| Subject Line | Urgent vs. friendly tone | Open Rate |
| Send Time | 8 AM vs. 8 PM | Open + Click Rate |
| Layout | Hero image top vs. middle | Click-through Rate |
| CTA | “Shop Now” vs. “Reveal My Deal” | Click-through and Conversion Rate |
| Personalization | Recommendations based on behavior vs. generic offer | Revenue per Email |

Don’t rely on assumptions. Let your subscribers’ behavior surface the answers. That’s how small tweaks compound into real return over time.

Designing and Running Your A/B Tests

Good testing starts with deliberate design, not random guessing. Each A/B test you run should be built on a clear hypothesis, a defined audience segment, and tracking that supports statistical reliability. Follow this step-by-step framework to structure email experiments that produce real, revenue-driving insight, not just surface-level lifts.

Step 1: Form a Hypothesis

A well-formed hypothesis keeps your test focused and actionable. It should clearly state what you’re changing, what you expect to happen, and how you’ll measure the outcome.

Use this format to craft your hypothesis:

- If we change [single element],

- then [expected outcome will occur],

- as measured by [primary metric].

For example: If we add product recommendations based on previous purchases, then conversion rate will increase, as measured by completed checkout events.

Limit your test to one element and one expected behavior. Vague or multi-variable hypotheses produce noise, not insight.

Step 2: Define Your Test Group Size

Sample size directly affects test validity. Send your test to too few people, and you won’t know if a result is meaningful or just random variation. Send it to too many without clear winning criteria, and you risk wasting reach on underperforming versions.

Use this framework to shape your group sizes:

- Minimum sample threshold: Only test if you expect at least [insert minimum #] recipients per version

- Split ratio: Standard A/B splits are 50/50, but you can allocate more traffic to a winning version once significance is reached

- Control variables: Audience segmentation, email send time, and external conditions must be consistent across both groups

If a test is too small to reach significance, rerun it across multiple sends rather than interpret weak data.
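
To get a feel for how many recipients a test needs, you can estimate a sample size from your baseline rate and the lift you hope to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions. The function name, the 80% power default, and the example rates are assumptions, and the result is a planning estimate rather than a guarantee.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate,
                            confidence=0.95, power=0.80):
    """Approximate recipients needed per version for a two-sided test."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to estimate a sample size")
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline_rate * (1 - baseline_rate)
                        + expected_rate * (1 - expected_rate))
    ) ** 2
    return ceil(numerator / (expected_rate - baseline_rate) ** 2)

# Hypothetical: 20% baseline open rate, hoping to detect a lift to 22%
print(sample_size_per_variant(0.20, 0.22))
# A smaller expected lift needs a much larger group
print(sample_size_per_variant(0.20, 0.21))
```

The smaller the difference you want to detect, the more recipients each version needs, which is why subtle changes are hard to test on small lists.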

Step 3: Set the Test Duration

End your test only when you have conclusive data, not when it “feels done.” Most email engagement happens within the first [insert time window], but allow enough time to capture lagging behavior (such as revenue from post-click conversions).

- Typical duration: Allow at least [insert standard window, e.g. 24-72 hours] for test engagement to stabilize

- Peak decision point: Don’t declare a winner until engagement plateaus and you’ve confirmed sufficient sample size and statistical significance

- Test cutoff rules: Predefine the exact point at which you’ll end the test and declare a winner (example: 95% confidence level or threshold revenue difference)

Never end a test early based only on partial open or click rates. Those early signals can be misleading.
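
Writing the cutoff rules down as code (or even as a checklist) before the send makes them harder to bend later. Here is a minimal sketch. The `StoppingRule` fields and the example values of 48 hours and 5,000 recipients are hypothetical, not recommendations.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class StoppingRule:
    min_hours: float                 # minimum time before any review
    min_recipients_per_variant: int  # sample-size floor per version
    max_p_value: float               # 0.05 corresponds to 95% confidence

def can_declare_winner(rule, hours_elapsed, recipients_a, recipients_b, p_value):
    """Return True only when every predefined condition is met."""
    return (
        hours_elapsed >= rule.min_hours
        and min(recipients_a, recipients_b) >= rule.min_recipients_per_variant
        and p_value <= rule.max_p_value
    )

# Hypothetical rule, fixed before the campaign goes out
rule = StoppingRule(min_hours=48, min_recipients_per_variant=5000, max_p_value=0.05)

print(can_declare_winner(rule, hours_elapsed=6, recipients_a=5200,
                         recipients_b=5150, p_value=0.01))   # False: too early
print(can_declare_winner(rule, hours_elapsed=50, recipients_a=5200,
                         recipients_b=5150, p_value=0.01))   # True
```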

Step 4: Choose the Right Testing Tool

You don’t need to cobble together spreadsheets and guesswork. Most email marketing platforms include built-in A/B testing tools that simplify execution and analysis. Choose one that supports the level of granularity and segmentation you need.

Email platforms with A/B testing features usually include:

- Automated sample splitting and traffic balancing

- Real-time performance dashboards

- Statistical significance calculations

- Auto-send functionality for deploying the winner to the rest of your list

- Integration with ecommerce and analytics platforms

Before starting, confirm your tool allows you to:

- Run tests on segmented groups

- Track all relevant engagement and conversion events

- Customize traffic splits and sample sizes per campaign

Your tools should match not just your testing needs, but your ability to act on the data.

Step 5: Document Everything

Consistent documentation is what turns a single test into an ongoing improvement process. Capture every detail of the test, including:

- Date, time, and duration

- Audience segment and size

- Hypothesis and test variable

- Versions tested (copy, creative, etc.)

- Results by performance metric

- Traffic sources and potential external variables

Create a repeatable format or spreadsheet to record your tests. This becomes your internal library of what’s been tried, what worked, and what didn’t, so you don’t repeat failed ideas or overlook successful patterns.
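
If a spreadsheet is your test library, a fixed set of columns keeps entries comparable over time. The sketch below writes one test record as a CSV row. The `ExperimentRecord` field names and the example values are assumptions, and it writes to an in-memory buffer so you can adapt it to a real file.

```python
import csv
import io
from dataclasses import dataclass, asdict, fields

@dataclass
class ExperimentRecord:
    sent_at: str
    duration_hours: int
    segment: str
    segment_size: int
    hypothesis: str
    variable: str
    version_a: str
    version_b: str
    primary_metric: str
    result: str
    external_factors: str = ""

def append_record(record, out, write_header=False):
    """Write one test record as a CSV row to an open file-like object."""
    writer = csv.DictWriter(out, fieldnames=[f.name for f in fields(ExperimentRecord)])
    if write_header:
        writer.writeheader()
    writer.writerow(asdict(record))

# Hypothetical entry
record = ExperimentRecord(
    sent_at="2024-03-05 09:00",
    duration_hours=48,
    segment="active_buyers",
    segment_size=10000,
    hypothesis="Curiosity subject line lifts open rate",
    variable="subject line tone",
    version_a="urgent",
    version_b="curious",
    primary_metric="open_rate",
    result="B won, significant at 95%",
    external_factors="no promotion running",
)
buffer = io.StringIO()  # swap for open("test_log.csv", "a", newline="") in practice
append_record(record, buffer, write_header=True)
print(buffer.getvalue())
```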

Test Workflow Template

| Step | Task | Output |
|---|---|---|
| 1 | Craft hypothesis | Document expected outcome + metric |
| 2 | Segment audience | Two equal random subgroups from target segment |
| 3 | Build variations A and B | Previewed and validated within platform |
| 4 | Schedule test duration | Predefined timeline with cutoff criteria |
| 5 | Monitor performance | Track in-platform reports for key metrics |
| 6 | Decide and deploy winner | Rollout to broader audience or apply to next campaign |
| 7 | Document and log results | Store in test repository for future reference |

Future-Proof Your Testing

Testing is not a one-and-done tactic. A rigorous A/B testing process gives your ecommerce brand a real advantage. One built not just on creativity, but on compounding knowledge. Each email becomes smarter than the last.

Build your tests to serve your next campaign as much as your current one.

Analyzing Test Results and Making Data-Driven Decisions

This is where the real ROI lives. Running A/B tests means little if you can’t interpret the results correctly or act on what they tell you. Turning data into decisions requires a structured approach to analysis. One that goes beyond “Which version clicked higher?” You need to identify statistically valid patterns, avoid false wins, and translate insights into actions you can repeat across campaigns.

1. Read the Data Through the Lens of Your Hypothesis

Start by revisiting your original hypothesis and primary metric. If your test was based on increasing click-through rates with a new CTA, don’t get distracted by open rate fluctuations or unsubscribes. Focus analysis on the metric directly tied to your test objective.

- Only the selected metric determines the winner.

- Secondary metrics can support your interpretation but shouldn’t guide the decision.

- Resist re-scoping the test afterward to fit better-performing metrics. That’s retroactive bias, not optimization.

Clarity on your goal makes analysis straightforward and aligned with business value.

2. Confirm That Results Are Statistically Significant

Without statistical significance, data is just noise. A difference in performance only matters if it isn’t due to chance.

- Statistical significance means the observed difference is unlikely to be the result of random chance alone.

- Confidence threshold: 95% is standard for declaring a winning version.

- Sample size matters: Small groups usually cannot deliver statistically valid conclusions.

Most email platforms provide tools for determining confidence levels. If yours doesn’t, plug campaign data into a significance calculator using inputs like conversion count, group size, and performance delta.

Never pick a winner based on early open rate spikes or small percentage gains. Significant doesn’t mean “slightly better.” It means “statistically dependable.”
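
If your platform doesn’t report confidence levels, a two-proportion z-test is one common way to check a difference in rates. The sketch below is a minimal version using only Python’s standard library. The function name and the example counts are made up for illustration, and it assumes each recipient either converts or doesn’t.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical test: 200 of 5,000 clicked version A, 260 of 5,000 clicked version B
z, p_value = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p_value:.4f}")
print("significant at 95%" if p_value < 0.05 else "not significant")
```

A p-value below 0.05 corresponds to the 95% threshold. Anything above it means the test has not yet shown a dependable difference.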

3. Watch for Fluctuation Pitfalls

Even well-executed tests can deliver misleading signals if viewed too early or without context. Here’s where to stay cautious:

- Early engagement bias: Initial open or click-through rates often overrepresent hyper-engaged users. Don’t decide a winner too soon.

- Time-based anomalies: Promotional windows, day-of-week behavior, or external events can skew behavioral data.

- Cross-test conflicts: Avoid running overlapping tests targeting the same audience segments. They can contaminate results.

Build a habit of validating conclusions against known benchmarks and campaign context.

4. Make a Confident, Single Decision

Once significance is confirmed, the next step is execution.

- Declare a winner: The version that outperformed on the test metric with statistical confidence.

- Roll it out: Deploy the winning version to the remaining subscribers or apply the insight to your next campaign.

- Log it: Capture what was tested, why it worked, and how it will influence future strategy.

If results are inconclusive, don’t try to force a decision. Either extend the test with a larger sample or iterate with revised variables.

Not every test delivers a win, but every test should deliver clarity.

5. Convert Insights Into Long-Term Strategy

One test is a tactic. A pattern is a strategy. Your goal isn’t to win every A/B test. It’s to uncover scalable insights that improve email performance over time.

- Track recurring patterns across similar audience segments or offer types

- Curate learnings in a testing log or internal library to share with your team

- Build on what works: Use winning elements as your new baseline for the next test

Great testing teams don’t just pick better subject lines. They build a learning engine that compounds insights into a stronger email strategy over time.

6. Document Actionable Outcomes

Each test should conclude with a clear lesson. Win or lose. Capture that lesson in standardized form to preserve institutional knowledge and reduce re-testing the same ideas later.

| Field | Details to Capture |
|---|---|
| Hypothesis | What you tested and why |
| Test Variable | Which single element was changed |
| Primary Metric | The KPI used to judge success |
| Outcome | Result + statistical significance |
| Next Action | How findings will be applied in future sends |

Data is only power when it leads to repeatable improvement.

7. Build Continuity Into Your Testing Process

Testing isn’t something you ‘complete’. It’s a recurring practice. Allow each A/B test to inform the next. For example:

- If a subject line with curiosity outperformed urgency, test more curiosity formats next

- If a product image layout improved clicks, apply it across other collections

- If personalization increased RPR, test deeper zero-party data use in future flows

Continuous optimization is how email evolves from marketing channel to revenue engine.

Each test is an input. How you respond is what drives performance forward.

Integrating A/B Testing Best Practices into Your Ongoing Email Strategy

You can’t improve what you don’t consistently test. A/B testing isn’t a campaign add-on. It’s a process that needs to be built into the backbone of your email marketing engine. When done routinely, structured testing turns your email program from a guessing game into a predictable growth lever.

Make Testing a Habit, Not a One-Off

Testing shouldn’t be reserved for launches, holidays, or when engagement drops. It should be woven into the regular rhythm of your campaign calendar. Here’s how to standardize the process so insights accumulate over time:

- Assign a testing slot in every weekly or monthly email deployment plan

- Rotate focus areas (subject line, layout, CTA, send time) across different campaigns

- Dedicate resources for hypothesis creation, test setup, and analysis in your workflow

The goal is to normalize small, repeatable improvements. Not chase giant wins every time.

Create a Test Repository

Every test teaches something. Capture it. As your team runs more experiments, you’ll develop a library of learnings that inform future creative, copy, and timing decisions.

Use a standardized format to record tests, such as:

- Test variable and versions

- Audience segment and list criteria

- Performance against the primary metric

- Winning version and how it was applied

This archive becomes your strategic blueprint, so new campaigns start smarter every time.

Build Testing Into Campaign Workflows

To make testing routine, integrate it into the same processes that produce each campaign. That includes:

- Brief templates: Add a field for testing objective and variable

- Review checklists: Include test setup confirmation as a QA step

- Reporting cycles: Bake test analysis into regular reporting meetings

If it’s not integrated, it won’t be consistent. Operationalize testing instead of treating it like an experiment every time.

Use Testing to Improve Workflow Efficiency

Testing doesn’t just help you write better emails. It helps you write fewer variations that matter more. It removes the guesswork and back-and-forth by creating evidence-based standards for what works.

- Know which subject line style performs best so you don’t debate it every send

- Create reusable layouts driven by click heatmaps or CTR results

- Automate winning versions into flows and sequences

Testing clarifies where to spend creative effort and where to stick with what works.

Prioritize Impactful Test Areas Over “New Ideas”

Not every test is worth your time. Focus on variables that affect subscriber decisions, not just surface-level tweaks. Prioritize tests tied to performance gains in:

- Open behavior: Subject lines, sender name, personalization

- Click behavior: CTA design, product placement, mobile layout

- Conversion behavior: Offer structure, urgency cues, post-click experience

The best testing strategies target leverage points that influence revenue and buyer movement, not vanity wins.

Turn Campaign Learnings Into Automated Wins

Once strong patterns emerge, translate high-performing elements into your flows and automations.

- Winning CTAs get embedded into abandoned cart templates

- Effective layouts replace older formats in promo flows

- Personalization tactics expand across welcome and post-purchase touchpoints

You don’t just build better broadcasts. You reshape your automated system with proof-backed improvements.

Designate a Testing Owner or Steward

To keep testing consistent, make someone responsible for it. That person (or role) leads planning, logging, and follow-through. Their role is to:

- Identify which campaigns should include A/B tests

- Document outcomes and maintain the test log

- Share insights across teams and marketing channels

Consistency isn’t a product of good intentions. It’s a product of ownership.

Routinize Testing With Repeatable Frameworks

Create predictable routines and reusable tools so A/B testing doesn’t require reinvention. A solid framework might include:

- A rolling test calendar based on campaign volume

- A standardized testing brief format

- A centralized doc for logging past experiments, key outcomes, and future ideas

If you’re running 1–2 tests per week, you’ll finish the year with over [insert count] decisions grounded in data instead of assumptions.

That’s how A/B testing becomes a strategic engine, not just a tactic.

Addressing Common Challenges and Mistakes in A/B Testing for Email Marketing

Every email marketer hits a wall eventually. You run a smart-looking A/B test, and the results either contradict logic, produce no clear winner, or nudge you in the wrong direction. The problem usually isn’t the tactic. It’s how it’s executed.

Even small errors can lead to false conclusions, wasted reach, and lost revenue. Let’s break down the most frequent issues and how to avoid each with precision.

1. Insufficient Sample Size

You can’t trust results from an underpowered test. A sample that’s too small creates misleading data swings and prevents statistically significant outcomes.

- Problem: Declaring winners with only a few dozen recipients per version

- Fix: Set a minimum threshold for recipients per variant (based on expected conversion rate and confidence level). Wait until that threshold is reached before analyzing results.

- Pro Tip: If your list is too small for traditional A/B testing, use multi-send strategies and aggregate results over time.
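
As a sketch of the multi-send approach, you can sum counts for each version across several sends and evaluate the totals. The tuple layout and the example numbers below are assumptions. Pooling only makes sense when the same two versions ran under comparable conditions each time.

```python
def pool_sends(sends):
    """Sum per-send counts for each version.

    Each item is (conversions_a, recipients_a, conversions_b, recipients_b).
    """
    conv_a = sum(s[0] for s in sends)
    n_a = sum(s[1] for s in sends)
    conv_b = sum(s[2] for s in sends)
    n_b = sum(s[3] for s in sends)
    return {
        "a": {"conversions": conv_a, "recipients": n_a, "rate": conv_a / n_a},
        "b": {"conversions": conv_b, "recipients": n_b, "rate": conv_b / n_b},
    }

# Hypothetical results from three small sends of the same test
sends = [
    (12, 400, 18, 410),
    (9, 380, 15, 390),
    (14, 420, 17, 400),
]
pooled = pool_sends(sends)
print(pooled["a"])
print(pooled["b"])
```

The pooled counts can then go through whatever significance check you normally use.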

2. Testing Too Many Variables at Once

Bundling several changes into one A/B test sounds efficient, but it muddles the answers. If you change the subject line, layout, and CTA together, how do you know which one drove performance?

- Problem: Creative teams bundle multiple changes to “kill two birds”, but end up with no directional clarity

- Fix: Isolate one variable per test. Once a winner is found, use it as a new control in the next test round

Clarity beats speed when it comes to measuring success.

3. Declaring Winners Too Early

Early engagement can be misleading. Highly active users often respond in the first few hours, while lagging behaviors (especially conversions) may take longer to register.

- Problem: Ending a test based on a midday click-through bump or day-one open rate

- Fix: Set a minimum test duration before review. Wait until audience engagement stabilizes based on past behavior patterns

- Reminder: Conversions often lag clicks. Give post-click actions time to complete

Test rules should be set before you launch, not while watching results roll in.

4. Ignoring External Variables

Testing in a vacuum doesn’t reflect real-world buyer behavior. Campaign context, such as discount events, holidays, or site downtime, can skew test performance in unpredictable ways.

- Problem: A high-performing subject line during Black Friday doesn’t mean it will succeed in February

- Fix: Document environmental conditions in your test log. Tag results by campaign type, season, and promotions

- Better yet: Rerun promising tests in non-peak periods to validate staying power

Contextual awareness makes results applicable. Not just interesting.

5. Running Overlapping Tests

Too many simultaneous tests can poison your data. If multiple experiments target the same segment at once, results influence one another (and produce unreliable conclusions).

- Problem: Running a subject line test while also testing layout on a triggered flow to the same audience

- Fix: Schedule tests to avoid overlap. Prioritize tests by objective and keep test groups discrete

Cross-test contamination is hard to spot, but easy to prevent with a testing calendar.
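
A testing calendar can be checked for conflicts automatically. The sketch below flags any two planned tests that target the same segment on overlapping dates. The `PlannedExperiment` fields and the example schedule are hypothetical, and it assumes differently named segments do not share subscribers.

```python
from datetime import date
from itertools import combinations
from typing import NamedTuple

class PlannedExperiment(NamedTuple):
    name: str
    segment: str
    start: date
    end: date

def find_overlaps(experiments):
    """Return pairs of experiment names that share a segment and overlap in time."""
    conflicts = []
    for a, b in combinations(experiments, 2):
        same_segment = a.segment == b.segment
        dates_overlap = a.start <= b.end and b.start <= a.end
        if same_segment and dates_overlap:
            conflicts.append((a.name, b.name))
    return conflicts

# Hypothetical calendar
calendar = [
    PlannedExperiment("subject_line_tone", "active_buyers", date(2024, 3, 3), date(2024, 3, 5)),
    PlannedExperiment("layout_hero", "active_buyers", date(2024, 3, 5), date(2024, 3, 7)),
    PlannedExperiment("cta_copy", "lapsed", date(2024, 3, 4), date(2024, 3, 6)),
]
print(find_overlaps(calendar))  # [('subject_line_tone', 'layout_hero')]
```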

6. Misinterpreting Secondary Metrics

Stick to your hypothesis. A winning CTA test shouldn’t be judged on open rates. Only your primary metric determines the outcome.

- Problem: Calling version B the winner because it generated more clicks, despite testing for conversions

- Fix: Choose a primary metric specific to your test objective. Track secondary data, but don’t let it sway judgment

Misaligned metrics produce false positives. Stay focused on the results that support your hypothesis.

7. Failing to Record What Was Learned

Testing without documentation is testing on repeat. Without a unified test archive, teams revisit old ideas, miss pattern recognition, and underuse what already works.

- Problem: No centralized system for logging what was tested and what worked

- Fix: Use a simple spreadsheet or shared repository to track hypotheses, variables, metrics, outcomes, and final decisions

- Example columns: Campaign date, audience segment, variable tested, result, notes for future application

A clear record helps avoid redundant testing and makes every outcome more useful.

8. Misaligning the Test With Business Strategy

Don’t test in isolation. Every A/B test should map to a meaningful business goal—whether it’s increasing CLV, driving first purchase, or reducing churn.

- Problem: Testing design tweaks without strategy alignment

- Fix: Tie every variable tested to a customer behavior or financial lever. Document that connection as part of your hypothesis

A test without strategic value is just busywork.

Before You Run Any Test, Run Through This QA

- Do I have a clear hypothesis tied to a primary metric?

- Is my sample size big enough to deliver significance?

- Am I testing only one variable?

- Have I documented external factors or promotions?

- Is tracking confirmed for all outcomes?

- Will I wait the full test period before deciding?

- Have I checked for other overlapping tests?

Anything less increases noise, not insight.

Better Testing Means Better Email. Better Email Means Better Revenue.

A/B testing for ecommerce email only works when it’s disciplined. That means eliminating guesswork, building a consistent framework, and protecting your data from misinterpretation.

The more careful your process, the more confident your marketing decisions.

Best Tools for A/B Testing Ecommerce Emails

| Tool | What It Offers |
|---|---|
| Klaviyo | Easy A/B setup for flows & campaigns |
| Omnisend | Drag-and-drop A/B builder |
| ActiveCampaign | Deep insights + automation testing |
| Mailchimp | Simple A/B features for subject lines |
| Email on Acid | Rendering + test insights |

FAQs: A/B Testing for Ecommerce ROI

1. How often should I A/B test?

At least once per major campaign or new flow.

2. What’s the best thing to test first?

Subject lines, then CTA buttons or layout.

3. Can I test emails inside flows?

Yes—Klaviyo and Omnisend let you test within automation.

4. How do I know if the test is valid?

Use a large enough audience and random splits, let the test run for at least 24–48 hours, and confirm the result is statistically significant before acting on it.

5. Should I always go with the winner?

Yes, if the lift is meaningful. But re-test occasionally—audiences evolve.

Test Smarter, Earn More

A/B testing takes the guesswork out of your ecommerce email strategy. By testing strategically and tracking the right data, you’re not just hoping for results—you’re engineering them.

At The Mail Effect, we help ecommerce brands plan, execute, and scale email A/B testing that leads to measurable sales growth.